It started innocently enough. I had an idea that seemed perfectly reasonable at the time: create an automated pipeline that would transform written character descriptions into YouTube-ready fantasy creature videos. The dream was delightfully straightforward—spend a couple of hours writing character descriptions, press a button, and watch as a dozen finished videos materialized, ready to captivate the internet (and perhaps generate that mythical beast known as “passive income”).

The creatures would be magical and whimsical, inspired by Fantastic Beasts and Where to Find Them. I’d integrate AI at every step: character generation, image creation, video assembly, narration, and music. All I’d need to do was approve the final product before uploading—or so I thought.

What transpired instead was a journey through the strange valley between AI’s capabilities and limitations, where human curation stubbornly refused to be automated away, and where the line between “innovative pipeline” and “just doing it manually” became increasingly philosophical.

Summoning Creatures from the Digital Ether

My first step was creature ideation. Gemini 1.5 Flash proved to be a surprisingly capable magical zoologist, helping me generate and refine concepts into detailed character sheets. The first success was the Lumis—a blue, monkey-like creature with a lion’s mane, falcon-like beak, and pink belly that glows different colors depending on its mood.

The character sheet contained everything from physical attributes to habitat, diet, and lore:

Physical Features: Narrow body resembling a small monkey. Long, prehensile tail with a lion’s tuft. Large, speckled golden-green eyes. Four legs with falcon-like talons. Bioluminescent glow that changes with mood: blue for calm, yellow for alert, red for threatened, purple for curious.

Habitat: Ancient Forests of Xylo, high canopies, deep caves, tropical jungles.

Lore: Considered guardians of the forest, protectors of its secrets and wonders. Lumis are said to bring good fortune to those who befriend them.
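A sheet like this is easier to feed into later pipeline steps if it also exists in a machine-readable form. The sketch below is one possible shape for it, not the format used in the project: the `CreatureSheet` class, its field names, and the `glow_for` helper are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CreatureSheet:
    """Hypothetical container for a character sheet; field names are illustrative."""
    name: str
    features: list[str]
    habitats: list[str]
    lore: str
    mood_colors: dict[str, str] = field(default_factory=dict)

    def glow_for(self, mood: str) -> str:
        """Return the glow color for a mood, or 'unknown' if the sheet lists none."""
        return self.mood_colors.get(mood, "unknown")


lumis = CreatureSheet(
    name="Lumis",
    features=[
        "narrow body resembling a small monkey",
        "long prehensile tail with a lion's tuft",
        "large speckled golden-green eyes",
        "four legs with falcon-like talons",
    ],
    habitats=["Ancient Forests of Xylo", "high canopies", "deep caves", "tropical jungles"],
    lore="Guardians of the forest, said to bring good fortune to those who befriend them.",
    mood_colors={"calm": "blue", "alert": "yellow", "threatened": "red", "curious": "purple"},
)

print(lumis.glow_for("threatened"))
```

Keeping the mood-to-color mapping as data means a prompt builder or a script writer could read the same source instead of each step restating it.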

What became clear during this phase was the need to balance creativity with technical feasibility. The ethereal, transparent creatures I initially imagined would be challenging for image generators to render consistently. I needed creature concepts that were tangible enough for the AI to grasp—concrete shapes, clear color patterns, and recognizable anatomical features.

I learned that technically accurate terms like “auriculars” (a bird’s ear region) confused the AI more than they helped. My initial, highly technical descriptions yielded inconsistent results, which forced me to rethink my approach to prompting.

The Image Generation Dance

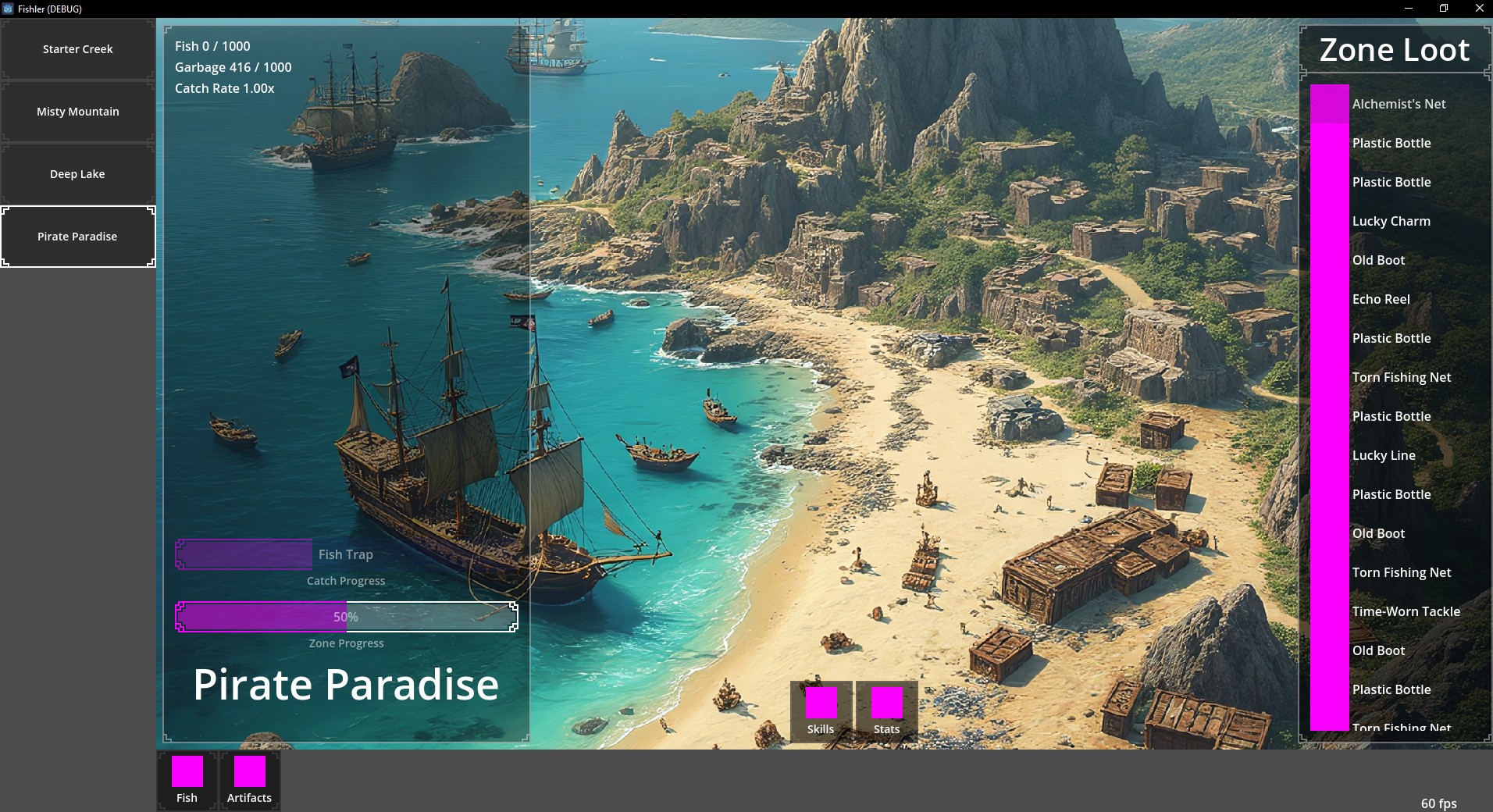

For visualizing these creatures, I settled on Fast Flux from Runware.ai, whose sub-second image generation was crucial for the iterative process I found myself in. After I wrote custom Python scripts to batch-process API calls and experimented with prompt variations, the images started flowing in by the thousands.

The results ranged from unexpectedly perfect to fascinatingly bizarre. For each successful image that captured the Lumis’s essence, there were several where the creature sprouted extra eyes, developed unconventional anatomy, or adopted an expression that could only be described as “existentially confused.”

My favorite bloopers included a Lumis with six eye sockets arranged in a honeycomb pattern, and another where the “auriculars” manifested as something resembling organic binoculars.

Some prompt variations led to more realistic, almost photographic renderings, while others produced cartoon-like interpretations. The shorter, more concrete prompts tended to yield more realistic images with detailed skin textures and sharp talons. Longer prompts that included descriptions of glows and moods often veered into whimsical territory.
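The short-versus-long prompt experiments can be sketched as a small variation generator. This is a minimal illustration, not the scripts used in the project: the `build_prompts` helper and the phrase lists are assumptions, and the call to the image service is deliberately left out.

```python
from itertools import product


def build_prompts(base: str, styles: list[str], details: list[str]) -> list[str]:
    """Cross a base description with style and detail fragments (hypothetical helper).

    An empty detail string produces the short, concrete variant of the prompt.
    """
    prompts = []
    for style, detail in product(styles, details):
        parts = [base, detail, style]
        prompts.append(", ".join(p for p in parts if p))
    return prompts


prompts = build_prompts(
    base="small blue monkey-like creature with a lion's mane and a falcon-like beak",
    styles=["photorealistic", "storybook illustration"],
    details=["", "pink belly glowing softly"],
)

for p in prompts:
    print(p)
```

Generating the variants from lists makes it possible to trace each image back to the exact fragments that produced it, which helps when comparing which wording pushes results toward realistic or whimsical.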

Video Generation: Dreams Meet Reality

Here’s where the pipeline hit its first major obstacle. The original plan was to have fully animated creatures moving through their habitats—Lumis leaping through trees, glowing different colors as their moods changed. The reality? Local video generation was nowhere near ready for this vision.

I experimented with CogVideo X 2B locally, but the outputs were inconsistent and the model struggled with fantastical creatures. Runway ML would have been a decent option, but the pricing made it impractical for what was essentially a creative experiment. After weighing the options, I pivoted to static images with panning and zooming set against AI-generated narration: basically, the Ken Burns effect applied to magical creatures.

This compromise solution required manual work in CapCut, where I arranged pans and zooms to align with the narration. While it wasn’t the fully automated dream, it allowed me to complete the project and produce actual videos.
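The pan-and-zoom effect itself reduces to interpolating a crop rectangle over time. The sketch below shows only that arithmetic. It is not how the CapCut edit was made, and the function name, the box format, and the 30 fps default are assumptions.

```python
Box = tuple[float, float, float, float]  # (left, top, width, height) in pixels


def ken_burns_boxes(start: Box, end: Box, seconds: float, fps: int = 30) -> list[Box]:
    """Linearly interpolate a crop box from start to end, one box per frame."""
    frames = max(2, round(seconds * fps))
    boxes = []
    for i in range(frames):
        t = i / (frames - 1)  # 0.0 on the first frame, 1.0 on the last
        boxes.append(tuple(s + (e - s) * t for s, e in zip(start, end)))
    return boxes


# Slow zoom from the full 1920x1080 frame into its centered half-size region.
boxes = ken_burns_boxes((0, 0, 1920, 1080), (480, 270, 960, 540), seconds=4.0)
print(len(boxes), boxes[0], boxes[-1])
```

Each box would then be cropped from the source image and scaled to the output resolution. Keeping the start and end boxes at the same aspect ratio avoids stretching.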

The pivot exposed an interesting lesson: when building an automated pipeline, planning each component with automation in mind from the start matters enormously. Retrofitting automation onto a manual process is significantly harder than designing for it from the beginning.

The Sound of Magic: Narration and Music

For audio, I turned to Eleven Labs for narration and Suno for instrumental background music. Finding the right voice involved a surprisingly extensive audition process. I needed that perfect documentary narrator voice—authoritative yet slightly whimsical, the kind that could describe magical creatures without sounding either too clinical or too theatrical.

After testing over a dozen different AI voices, I settled on a male baritone voice with British inflections. The selection process itself was revealing: some voices that sounded perfect for short samples became grating over longer narrations. Others handled technical terms well but lacked the warmth needed for more poetic passages. Each voice sample required testing with multiple types of content:

Technical descriptions (“The Lumis possesses bioluminescent cells that respond to emotional stimuli”)

Location descriptions (“Deep within the ancient forests of Xylo”)

Action sequences (“As it senses danger, the Lumis’s glow transforms from a tranquil blue to an alarming red”)

The winner performed consistently across all three categories and kept appropriate pacing and emphasis, without awkward pauses or misplaced inflections.

Suno readily produced orchestral pieces with prompts like “hopeful, inspiring, adventure,” though selecting the perfect track still required human judgment. I used Gemini to generate scripts, broke them into chunks for Eleven Labs, and manually assembled the audio components in Audacity.
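Breaking a script into chunks for a text-to-speech service is one of the steps that automates cleanly. The following is a minimal sketch of sentence-based chunking, not the method used in the project: the `chunk_script` name and the 500-character default are assumptions, and the real limit depends on the service.

```python
import re


def chunk_script(script: str, max_chars: int = 500) -> list[str]:
    """Group whole sentences into chunks of at most max_chars characters.

    A single sentence longer than max_chars is kept as its own oversized chunk.
    """
    sentences = re.split(r"(?<=[.!?])\s+", script.strip())
    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


script = (
    "Deep within the ancient forests of Xylo lives the Lumis. "
    "As it senses danger, its glow turns red. "
    "It is said to bring good fortune."
)
chunks = chunk_script(script, max_chars=60)
print(chunks)
```

Splitting on sentence boundaries rather than at a fixed character count keeps the narrator from being cut off mid-sentence, which matters for pacing when the clips are reassembled.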

Again, the tension between automation and curation emerged. I could automate the generation of audio assets, but selecting the right ones remained stubbornly human. A fully automated pipeline would need to approximate subjective quality judgments, which felt like a problem several orders of magnitude more complex than the one I’d set out to solve.

The Results: Two Videos, Many Lessons

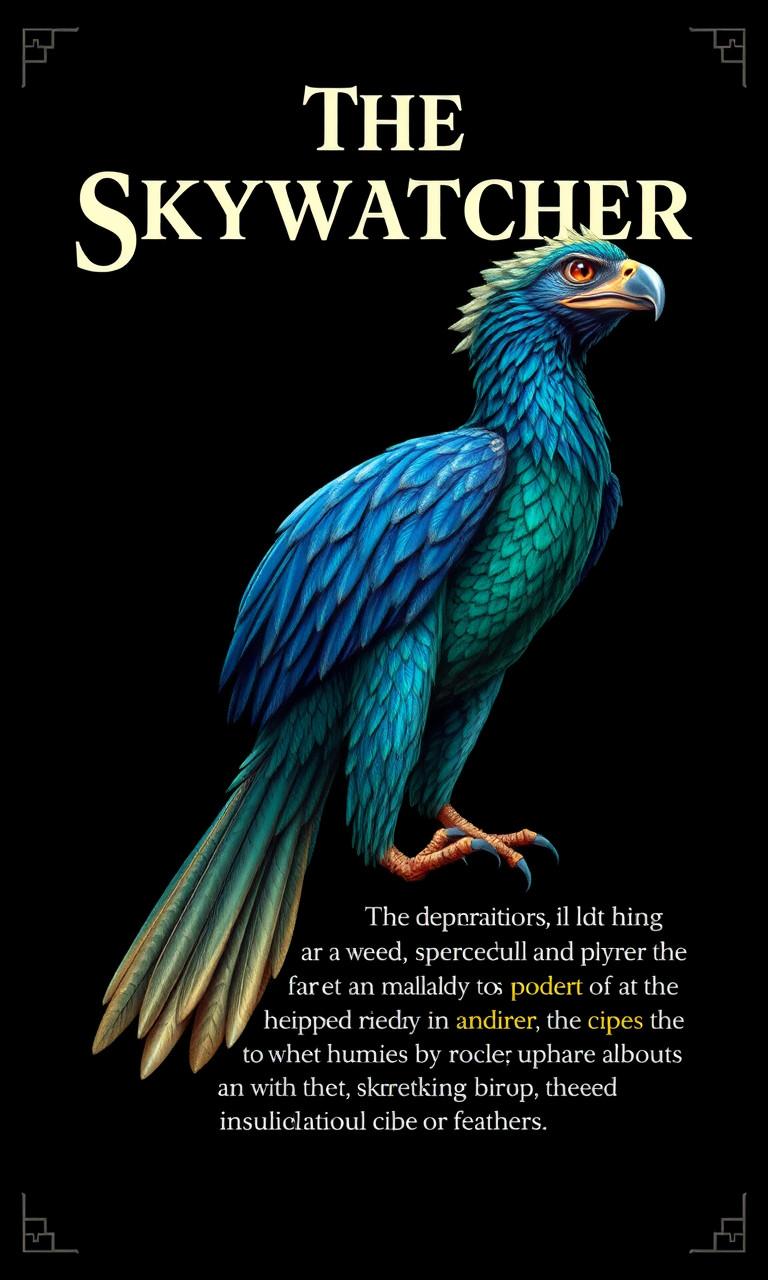

After approximately 20 hours spread across a long weekend, I had produced two videos: one featuring the Lumis and another featuring the Skywatcher (a different creature from the same project). While they weren’t the fully animated spectacles I’d envisioned, they had a charm of their own—a blend of still images with subtle motion and professional-sounding narration that managed to create a sense of magical documentary.

People who saw the videos were impressed that they were created entirely with AI, though interestingly, what impressed them wasn’t what impressed me. They saw the end product; I saw all the manual steps and curatorial decisions that the dream of automation hadn’t eliminated.

The Skywatcher posed its own challenges. While the Lumis had a clear, consistent visual identity, the Skywatcher’s ethereal nature made it harder for the AI to render consistently. The result was a wider range of interpretations, some capturing the essence beautifully and others veering into unexpected territory.

What I Learned: Automation Insights

The most valuable lesson from this project was about process planning. Breaking unfamiliar workflows into automatable components needs to happen at the beginning, not as an afterthought. Converting manual steps into automated ones retroactively is tedious and often requires complete redesign.

For instance, the video panning and zooming became a sticking point. Had I designed a structured approach to timing and movement patterns from the start, I could have built automation around those parameters. Instead, I found myself trying to reverse-engineer a freestyle creative process—a task approximately as pleasant as trying to alphabetize water.
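A structured approach to timing and movement could be as simple as a shot list derived from the narration. The sketch below is one hypothetical way to set that up: the `Shot` fields, the preset names, and the rule of cycling through presets are all assumptions, not something the project used.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Shot:
    image: str
    start: float     # seconds from the beginning of the video
    duration: float  # seconds, matched to one narration chunk
    move: str        # name of a movement preset


def plan_shots(images, durations, moves=("zoom_in", "pan_left", "zoom_out", "pan_right")):
    """Assign each image one narration chunk's duration and the next preset in rotation."""
    shots, clock = [], 0.0
    for image, duration, move in zip(images, durations, cycle(moves)):
        shots.append(Shot(image, clock, duration, move))
        clock += duration
    return shots


shots = plan_shots(
    images=["lumis_01.png", "lumis_02.png", "lumis_03.png"],
    durations=[4.0, 6.5, 5.0],  # lengths of the narration chunks, in seconds
)
for shot in shots:
    print(shot)
```

With the timing captured as data, the choice of movement for a given shot stays a creative decision, but applying it no longer requires dragging keyframes by hand.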

Local video generation proved unexpectedly challenging. Compared with sub-second image generation, the iteration cycle stretched from seconds to minutes, disrupting the creative flow. The quality and length limitations of locally generated video also necessitated upscaling with tools like Upscayl, which introduced artifacts and quality compromises.

Perhaps most importantly, I discovered that human curation remains irreplaceable at certain steps. While you can use models to evaluate outputs (“Is this a good image of a Lumis?”), that doesn’t replace the subjective, sometimes inexplicable human sense of “this one just works better.”
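One middle ground is to let a model narrow the field and leave the final pick to a person. The sketch below assumes some scoring function exists. The `shortlist` helper is hypothetical, and the scores are made-up stand-ins rather than output from any real evaluator.

```python
from typing import Callable


def shortlist(candidates: list[str], score: Callable[[str], float], keep: int = 5) -> list[str]:
    """Return the `keep` highest-scoring candidates, best first, for human review."""
    return sorted(candidates, key=score, reverse=True)[:keep]


# Stand-in scores; in practice these would come from some model-based evaluator.
fake_scores = {"lumis_001.png": 0.42, "lumis_002.png": 0.91, "lumis_003.png": 0.77}
picks = shortlist(list(fake_scores), fake_scores.get, keep=2)
print(picks)
```

This does not automate the judgment, it only shrinks the pile a human has to look through.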

Would I Do It Again?

Absolutely, but differently. I’d spend more on quality video outputs rather than trying to generate everything locally. I’d design each process step with automation building blocks from the beginning. And I’d accept that some human curation is inevitable, focusing automation efforts where they deliver the most value.

There’s something deeply satisfying about building automation, even when the manual task might take less time. Ten hours automating a five-minute process feels like time better spent than doing that process manually a few times—the difference between work and play, perhaps.

The project lives in a strange liminal space: not quite the success I’d envisioned, not quite a failure. Just like the Lumis itself—a curious creature of neither one world nor another, glowing different colors as its mood changes, occasionally sprouting an unconventional number of eyeholes.